Mapping Integration Data to Custom Properties

Integrate tools by sending arbitrary JSON payloads to OpsLevel. Use JQ to quickly and flexibly interpret data in your organization's preferred format.

Each Custom Integration comes equipped with a webhook endpoint that allows you to push data to OpsLevel. You can then manipulate this data via Extraction and Transform Configurations to update Custom Properties on existing Component Types.

Rather than relying on pre-defined integrations, this capability enables you to fully customize how data from external tools is incorporated into your catalog.

Why is Customizable Data Mapping Important?

- Flexibility: Map data from various sources (like Wiz, Jira, or Datadog) to OpsLevel in the way that makes the most sense for your organization.

- Faster Integration: Bring in data from tools that OpsLevel doesn't natively support, without waiting for bespoke development.

- Improved Data Visibility: Control where your data is stored and how it's displayed, giving you a clearer picture of your components and systems.

Getting Started

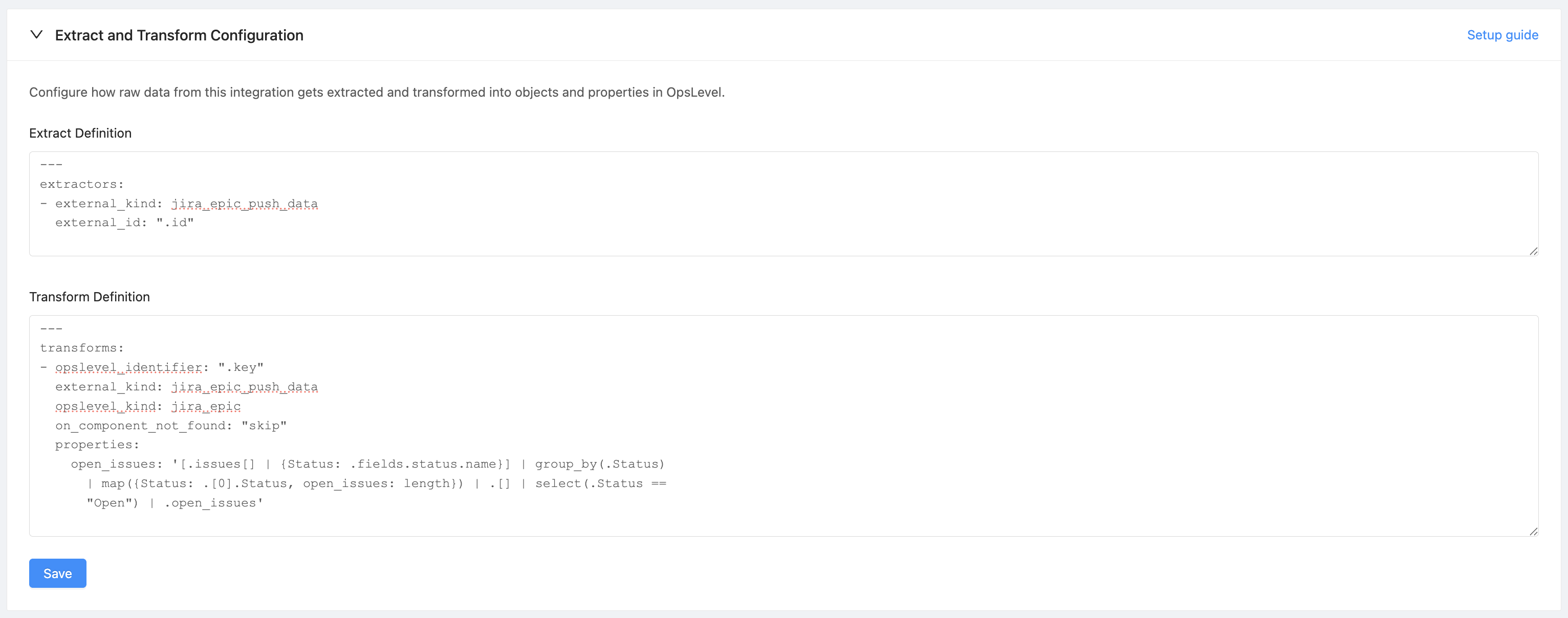

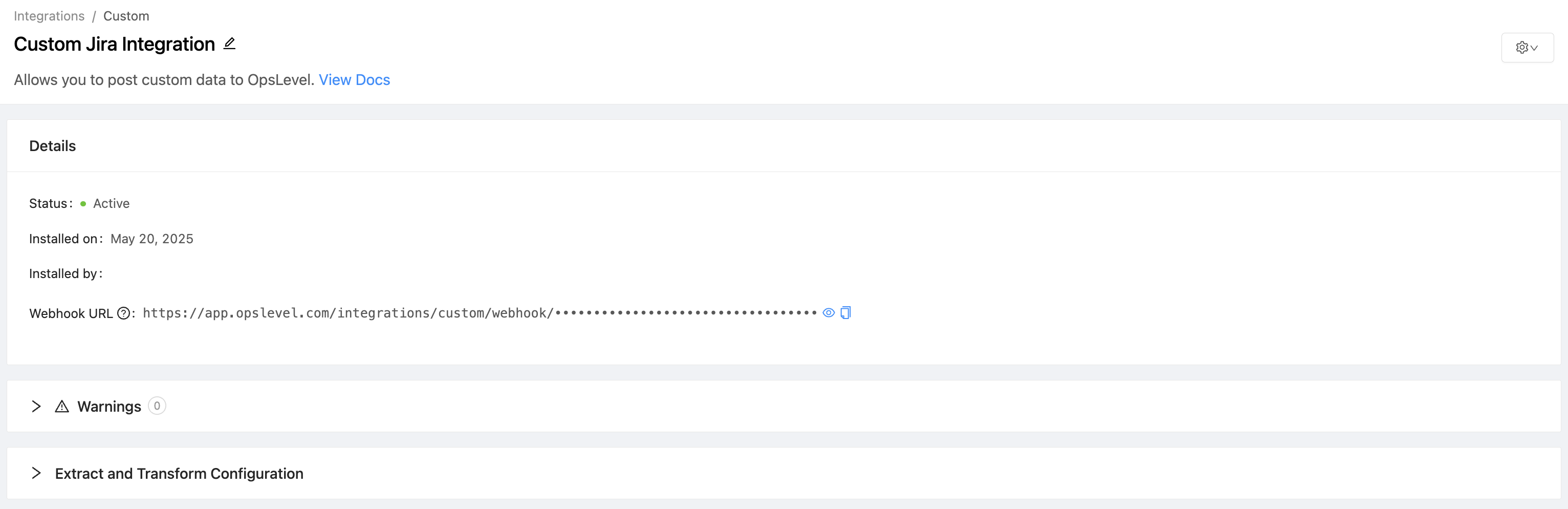

You will need to set up a Custom Integration in order to get started. This can be done through the UI or the API by following the guide found here. The Customizable Data Mapping feature configuration can be found within your Custom Integration, in the Extract and Transform Configuration collapsible section.

Within the integration details card, there is a Webhook URL. This is the endpoint you'll use to push your data to OpsLevel.

The expected structure of the webhook query parameters:

| Keys | Required | Description |
|---|---|---|
| external_kind | true | A unique identifier string, used by the Customizable Data Mapping feature to identify the type of your data. |

Configuration

The steps below use an example scenario where we want to push a Jira Epic to OpsLevel. Once configured, our example user will send a POST request to the endpoint https://app.opslevel.com/integrations/custom/webhook/••••••••••••••••••••••••••••••••••?external_kind=jira_epic_push_data, including the following data:

    {
      "key": "APA-1123",
      "id": "10000000",
      "issues": [
        {
          "fields": {
            "status": {
              "name": "Open"
            }
          }
        },
        {
          "fields": {
            "status": {
              "name": "In Progress"
            }
          }
        }
      ]
    }

Extraction Definition

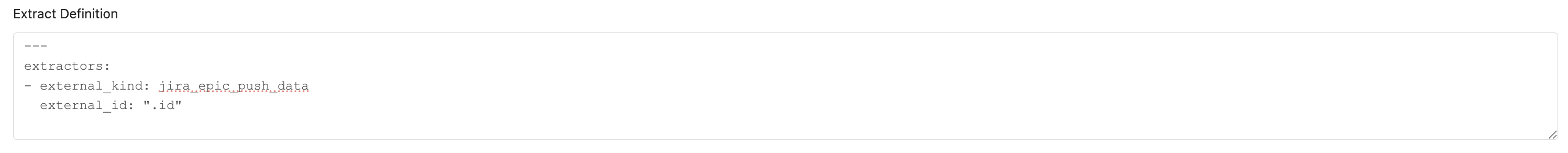

You will have to define an Extraction Definition within the Custom Integration in order for OpsLevel to store any data received via the integration. The Extraction Definition is written in YAML, and specifies how data sent to the Custom Integration will be stored for use in the transformation phase of the integration.

The expected structure of the Extraction Configuration is as follows:

    ---
    extractors:
      - external_kind: jira_epic_push_data
        external_id: ".id"

Note: the external_id you select must consistently refer to the same object across payloads in order to ensure mappings are processed correctly.

| Keys | Required | Type | Description |
|---|---|---|---|
| external_id | true | string | A JQ expression used to select the value that uniquely identifies the object in the external tool. For event payloads without a repeatable identifier, you may want to use the same value as for the opslevel_identifier transform configuration below. |
| external_kind | true | string | A unique string used to identify the data type. It is matched to the external_kind webhook URL parameter in order to determine which extractor to use. |
| exclude | false | string | A JQ expression that will exclude the given object from processing if it evaluates to true. |
| expires_after_days | false | integer | An integer number of days. Objects which have not received an updated payload within the specified number of days will be automatically deleted. |
| http_polling | false | object | This object defines how OpsLevel should poll an endpoint for data when users prefer polling over webhooks. Instead of using the webhook that comes with your integration to push data to OpsLevel, this configuration tells OpsLevel how to actively fetch data from your endpoint. |
| http_polling.url | true | string | The URL OpsLevel will poll daily. This property can be dynamically set using liquid templating and has access to OpsLevel secrets. |
| http_polling.method | true | string | The HTTP method to be used to retrieve data from the provided URL. |
| http_polling.for_each | false | string | Enables chained data collection by iterating through records from a previously defined external kind. |
| http_polling.headers | false | array | An array of objects describing the headers to be included in the request OpsLevel will make to your endpoint. This property can be dynamically set using liquid templating and has access to OpsLevel secrets. |
| http_polling.headers.name | true | string | The name of the header. |
| http_polling.headers.value | true | string | The value of the header. |
| http_polling.body | false | string | A string that represents a JSON body. |
| http_polling.errors | false | array | An array of objects describing specific error scenarios that may be encountered when OpsLevel polls for your data. Any error not explicitly listed and handled here is retried. |
| http_polling.errors.status_code | true | integer | The status code used to identify an error. This code is used to filter errors that you may want to assign a specific handler to. |
| http_polling.errors.handler | true | enum | One of either no_data or rate_limit. When an error occurs that is handled by no_data, OpsLevel treats it as no response. When an error occurs that is handled by rate_limit, OpsLevel retries with a backoff. |
| http_polling.errors.matches | false | string | A JQ expression used to further filter error messages that have passed the status_code filter above. |
| http_polling.next_cursor | false | object | Used to identify that a paginated response will be returned and from where in the response to get the pagination information. OpsLevel currently supports cursor pagination. This value is accessible in the HTTP polling URL with {{ cursor }}. |
| http_polling.next_cursor.from | true | enum | One of either header or payload, indicating the origin of the pagination information. |
| http_polling.next_cursor.value | true | string | A JQ expression used to parse the aforementioned origin for the cursor. |
| iterator | false | string | A JQ expression used to select a payload field to iterate over. If this key is specified, it will create individual objects within OpsLevel for each item returned, and all the other extractor configuration will apply per returned object. If this key is not specified, the entire data payload will be treated as a single object. |
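
The error-handling rows above can be read as a small decision rule. The following is a minimal Python sketch of that rule as the table describes it, not OpsLevel's implementation. The status codes 404 and 429 and the function name resolve_error_action are assumptions chosen for illustration, and the optional matches filter is left out.

```python
# Hypothetical http_polling.errors entries; the status codes are examples only.
error_rules = [
    {"status_code": 404, "handler": "no_data"},
    {"status_code": 429, "handler": "rate_limit"},
]


def resolve_error_action(status_code: int, rules: list) -> str:
    """Return the action the table describes for a failed polling response."""
    for rule in rules:
        if rule["status_code"] != status_code:
            continue
        if rule["handler"] == "no_data":
            return "treat as no response"
        if rule["handler"] == "rate_limit":
            return "retry with backoff"
    # Errors that are not explicitly listed are retried.
    return "retry"


print(resolve_error_action(404, error_rules))  # treat as no response
print(resolve_error_action(500, error_rules))  # retry
```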

Our user has defined a jira_epic_push_data kind, whose id field uniquely identifies the object in the external system.
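
To make the extraction step concrete, here is a rough Python simulation of what the extractor does with the example payload: it picks the extractor whose external_kind matches the webhook parameter and evaluates the external_id expression against the payload. This is a sketch under stated assumptions, not how OpsLevel is implemented. The helper eval_simple_path is hypothetical and only understands plain dotted paths such as ".id", not full JQ.

```python
from typing import Any

# The example payload from the Configuration section.
payload = {
    "key": "APA-1123",
    "id": "10000000",
    "issues": [
        {"fields": {"status": {"name": "Open"}}},
        {"fields": {"status": {"name": "In Progress"}}},
    ],
}

# Mirrors the example Extraction Definition.
extractors = [{"external_kind": "jira_epic_push_data", "external_id": ".id"}]


def eval_simple_path(expression: str, data: Any) -> Any:
    """Resolve a dotted path such as ".id" (hypothetical helper, not full JQ)."""
    value = data
    for key in expression.strip(".").split("."):
        if key:
            value = value[key]
    return value


def extract(external_kind: str, data: dict) -> dict:
    """Find the matching extractor and resolve the object's external_id."""
    for extractor in extractors:
        if extractor["external_kind"] == external_kind:
            return {
                "external_kind": external_kind,
                "external_id": eval_simple_path(extractor["external_id"], data),
                "data": data,
            }
    raise LookupError(f"no extractor defined for {external_kind!r}")


record = extract("jira_epic_push_data", payload)
print(record["external_id"])  # 10000000
```

Because the same external_id is produced every time this epic is pushed, later payloads update the same stored object rather than creating a new one.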

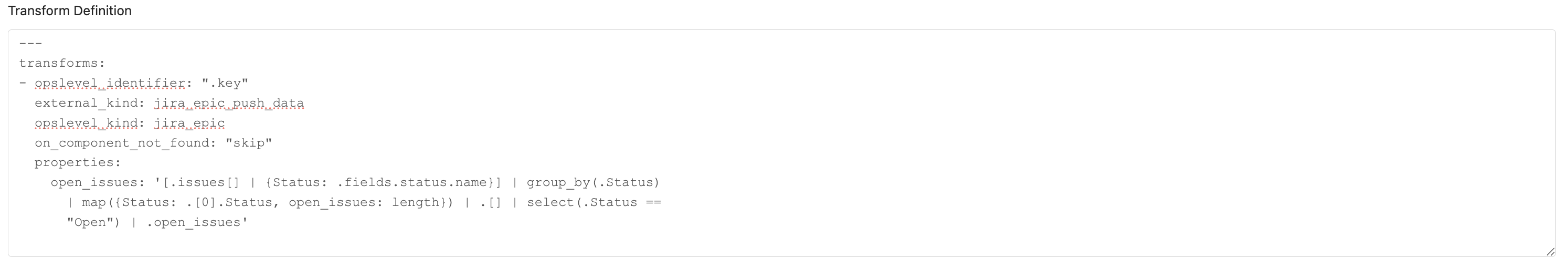

Transform Definition

Once the data has been extracted and stored, a Transform Definition must be created to manipulate OpsLevel objects with the data. The Transform Definition is written in YAML, and defines how extracted data is to be mapped to OpsLevel Component Types and Teams.

The expected structure of the Transform Definition is as follows:

| Keys | Required | Description |
|---|---|---|
| external_kind | true | A unique identifier string, used to select the extracted data to transform. This should match an |
| opslevel_kind | true | A string used to identify the type of component that this transform will update. This should match the alias of a Component Type or be equal to "team". |
| opslevel_identifier | true | A JQ expression used to select an identifier from the extracted data. The result of this expression should match the alias of an existing Component or Team. |
| on_component_not_found | true | An enum describing the action OpsLevel should take when the given |
| properties | false | A list of key-value pairs, where each key is the identifier of a custom property on the component type within OpsLevel. The value should be a JQ expression, which will be evaluated against the extracted data. |
|  | false | A list of key-value pairs, where each key is the identifier of one of the default component properties within OpsLevel. The shape of the value depends on the specific property. Currently only |
|  | false | An array of JQ expressions. The output should be a |
|  | false | This will add links to the repositories found by the |
|  | false | An array of JQ expressions, each of which will be evaluated against the extracted data. The output of each JQ expression can be a single object of the shape |
|  | false | This will attach the tools to the component, which can be found in the Operations tab. The format is an array of JQ expressions. The output should be either a single object, or an array of objects in the shape of: |

A sample Transform Definition can be found below.

    ---
    transforms:
      - external_kind: jira_epic_push_data
        opslevel_kind: jira_epic
        opslevel_identifier: ".key"
        on_component_not_found: "skip"
        properties:
          open_issues: "[.issues[] | {Status: .fields.status.name}] | group_by(.Status) | map({Status: .[0].Status, open_issues: length}) | .[] | select(.Status == \"Open\") | .open_issues"

Currently, this feature exclusively supports existing Component Types and Teams within OpsLevel. In order for your Transform Definition to take effect, please follow the linked Component Types and Teams setup guides.

Our user has created a Transform Definition that will update a jira_epic Component Type. The open_issues property will be updated with a count of all the issues with status "Open". The transform will be applied to all pushed data of the jira_epic_push_data kind.
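
For readers less familiar with JQ, the open_issues expression groups the issues by status name and returns the size of the "Open" group. The Python sketch below computes the same count for the example payload. It is an illustration only, and the function name count_open_issues is an assumption.

```python
from collections import Counter

# The example payload from the Configuration section.
payload = {
    "key": "APA-1123",
    "id": "10000000",
    "issues": [
        {"fields": {"status": {"name": "Open"}}},
        {"fields": {"status": {"name": "In Progress"}}},
    ],
}


def count_open_issues(data: dict) -> int:
    """Count the issues whose status name is "Open"."""
    status_counts = Counter(
        issue["fields"]["status"]["name"] for issue in data["issues"]
    )
    return status_counts["Open"]


print(count_open_issues(payload))  # 1
```

One difference worth noting: when no issue is "Open", the JQ pipeline emits no value at all because select filters out every group, whereas this sketch returns 0.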

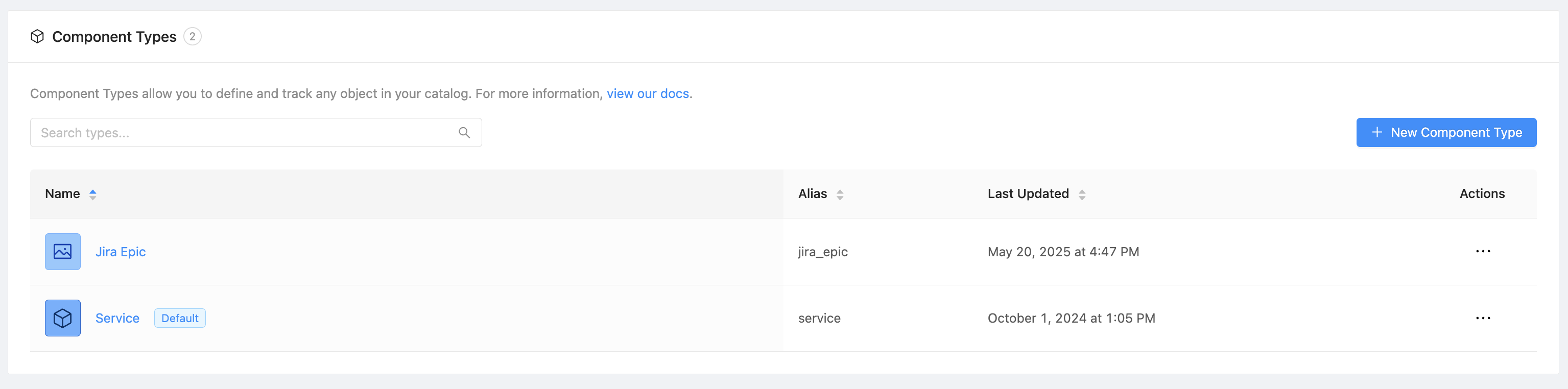

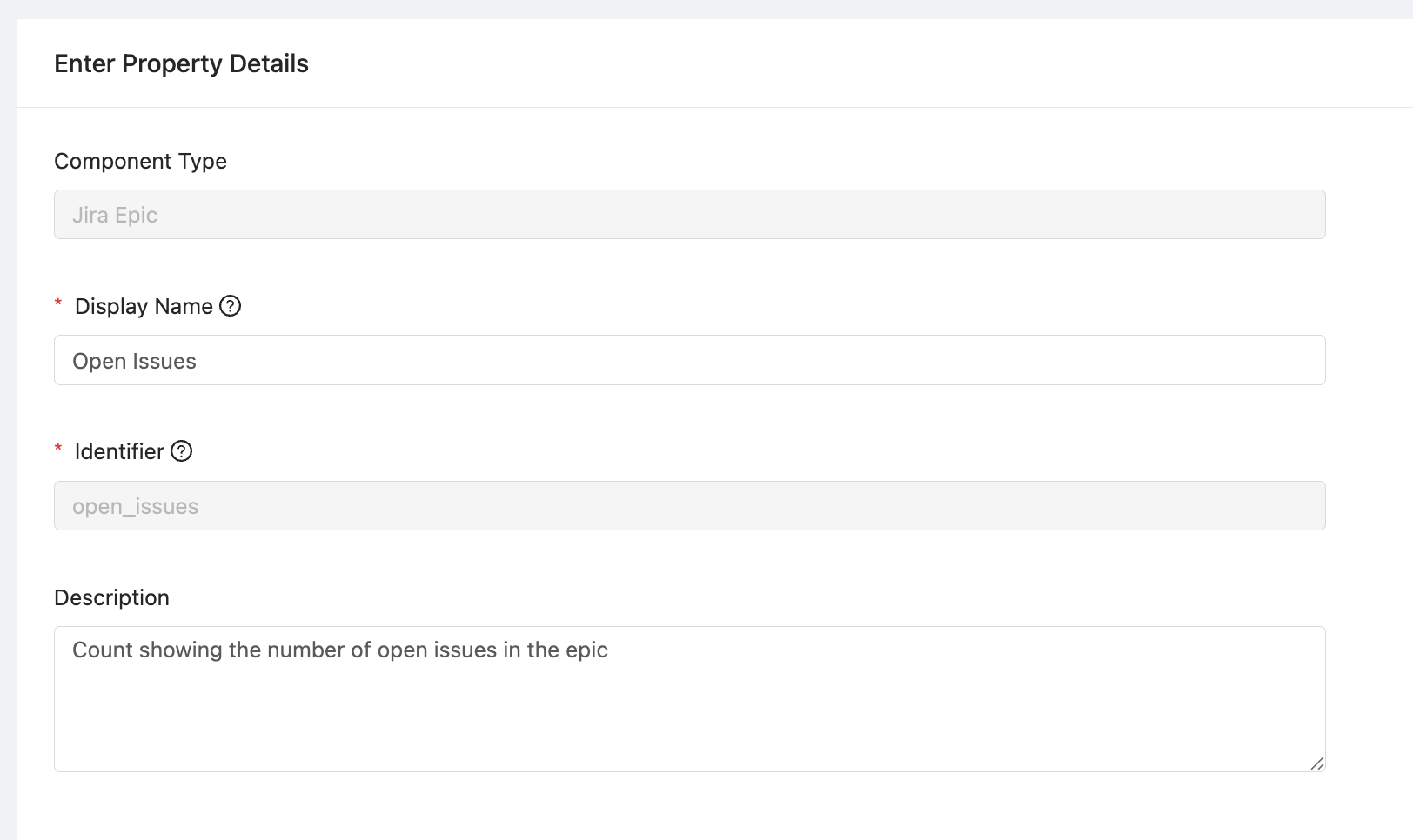

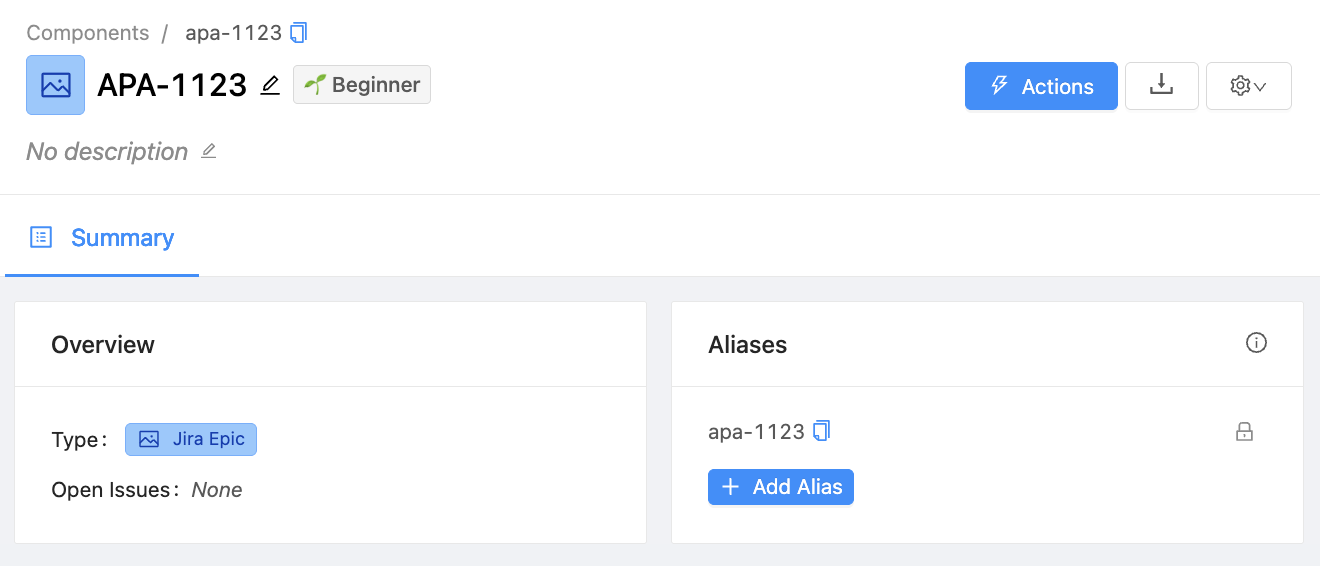

Our user has set up the requisite data as seen below. Notice the following:

- The alias of the "Jira Epic" matches the opslevel_kind in the Transform Definition
- The identifier of the Custom Property is open_issues, which matches the property in the Transform Definition
- An existing "Jira Epic" Component has been created with the alias apa-1123, matching the result of the opslevel_identifier JQ expression when evaluated against the JSON shown above.

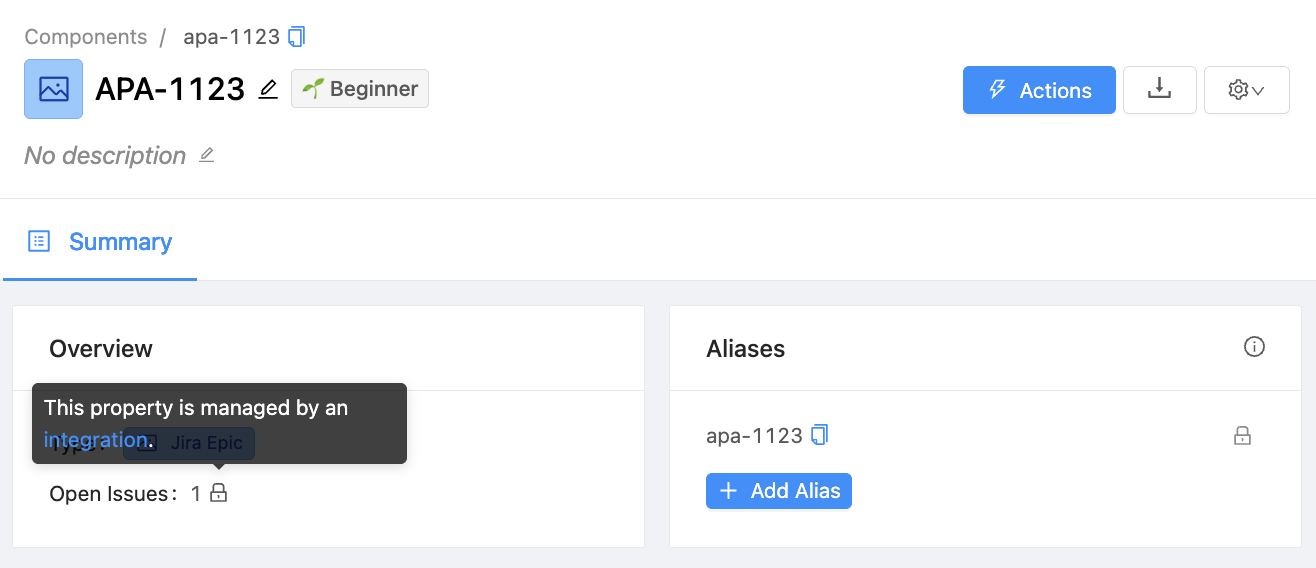

Putting it all Together

Once your Extraction and Transform Definitions have been saved, you may now push your data to the webhook endpoint shown in the details card.

Our user will be making a POST request to the endpoint https://app.opslevel.com/integrations/custom/webhook/••••••••••••••••••••••••••••••••••?external_kind=jira_epic_push_data.
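
As a rough illustration, the request could be built with Python's standard library as sketched below. The webhook ID in WEBHOOK_URL is a placeholder that you would replace with the value from your integration details card. The Content-Type header and the helper name build_webhook_request are assumptions made for this example, not documented requirements.

```python
import json
import urllib.parse
import urllib.request

# Placeholder: copy the real Webhook URL from your integration details card.
WEBHOOK_URL = "https://app.opslevel.com/integrations/custom/webhook/YOUR_WEBHOOK_ID"


def build_webhook_request(
    webhook_url: str, external_kind: str, payload: dict
) -> urllib.request.Request:
    """Build a POST request carrying the payload and the external_kind parameter."""
    query = urllib.parse.urlencode({"external_kind": external_kind})
    return urllib.request.Request(
        url=f"{webhook_url}?{query}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},  # assumed header
        method="POST",
    )


request = build_webhook_request(
    WEBHOOK_URL,
    "jira_epic_push_data",
    {"key": "APA-1123", "id": "10000000", "issues": []},
)

# Uncomment to actually send the payload:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```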

Once the pushed data has been extracted and transformed, the Custom Property on the specified Component will be updated. A tooltip will appear to indicate that the property is managed by an integration.
