Kubernetes Integration

Our Kubernetes integration lets you import and automatically reconcile Kubernetes resources in your cluster to OpsLevel services.

With our Kubernetes integration, you can import and regularly reconcile Kubernetes resources into OpsLevel. It’s a fast way to import your microservices into OpsLevel and keep them up-to-date automatically as data changes in your Kubernetes cluster. You can import your Kubernetes resources directly as OpsLevel services, or create service suggestions to map to existing services in OpsLevel.

Installation

    brew install opslevel/tap/kubectl

Full installation instructions are available in our kubectl-opslevel repo.

Creating OpsLevel service suggestions from Kubernetes resources

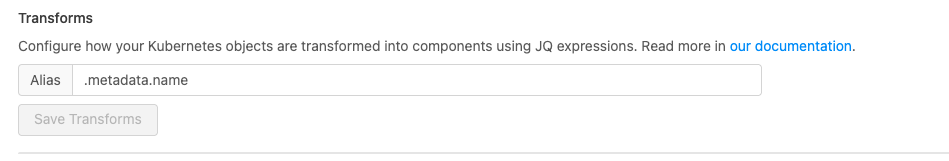

If you want to create service suggestions in OpsLevel, rather than mapping Kubernetes resources directly to OpsLevel services, you can define a jq transform in your integration configuration. We use the output of the transform to match service names for suggestions.

Example transforms

The appropriate transform depends on your Kubernetes configuration. Possibilities may include:

- .metadata.name

- "\(.metadata.namespace)-\(.metadata.name)"

- .metadata.labels."app.kubernetes.io/name"

- .metadata.labels."app.kubernetes.io/app"

- .metadata.labels."app.kubernetes.io/component"

- .metadata.annotations."opslevel.com/alias"

- .metadata.labels."app.kubernetes.io/instance"

- .metadata.labels."argocd.argoproj.io/instance"

Direct mapping of Kubernetes resources to OpsLevel services

Getting Started

Our integration is built as an extension to kubectl. It ships as a single binary, kubectl-opslevel, which can be placed anywhere in your $PATH. Once it is installed, you can run:

    kubectl opslevel ...

The next step is to define how to map data between Kubernetes resources and OpsLevel services. This mapping is specified in a configuration file and uses jq as a mechanism to select data from Kubernetes resources.

You can generate a sample configuration that shows a variety of examples with:

    kubectl opslevel config sample > ./opslevel-k8s.yaml

Preview

You can tweak values in the configuration file and preview their effect before running an actual import to OpsLevel. Like a dry run (or terraform plan), the preview command is always safe to run and shows a sample of the output. By default it displays 5 randomly sampled services. Remember to export or insert your OpsLevel API token:

    OL_APITOKEN=XXXX kubectl opslevel service preview -c ./opslevel-k8s.yaml

This shows how your Kubernetes data would map to OpsLevel services, but does not import any data or change your OpsLevel account in any way.

NOTE: this step does not validate any of the data with OpsLevel. Fields that reference other objects (e.g. Tier, Lifecycle, Owner) are not validated at this point and might cause a warning message during import.

You can adjust the number of samples to see in the preview command. For example, specify a sample count value of 10 and it will sample 10 services randomly to display:

    kubectl opslevel service preview 10 -c ./opslevel-k8s.yaml

You can see a preview of everything by specifying a sample count value of 0:

    kubectl opslevel service preview 0 -c ./opslevel-k8s.yaml

Import

Once you are happy with the configuration file, you can import your data into OpsLevel with the following command (remember to export or insert your OpsLevel API token):

    OL_APITOKEN=XXXX kubectl opslevel service import -c ./opslevel-k8s.yaml

This command may take a few minutes to run, so please be patient while it works. In the meantime, you can open OpsLevel in a browser and view the newly generated or updated services.

Reconcile

In addition to running the extension as a one-time importer, you can deploy it inside your Kubernetes cluster to regularly reconcile your Kubernetes resources with OpsLevel. To simplify this installation, we provide a Helm chart.

The Configuration File Explained

The tool is driven by a configuration file which describes how to map Kubernetes resource data into OpsLevel service objects. The configuration leverages jq to slice and dice data into the different fields on an OpsLevel service.

Mapping Kubernetes to OpsLevel

Here is a minimal example that maps a Kubernetes deployment’s metadata name to an OpsLevel service name:

    service:
      import:
        - selector:
            apiVersion: apps/v1
            kind: Deployment
          opslevel:
            name: .metadata.name

Here is an example that filters out unwanted keys from your labels to map into a service’s tags (in this case excluding labels that start with “flux”):

    service:
      import:
        - selector:
            apiVersion: apps/v1
            kind: Deployment
          opslevel:
            name: '"\(.metadata.name)-\(.metadata.namespace)"'
            aliases:
              - '"k8s:\(.metadata.name)-\(.metadata.namespace)"'
            tags:
              assign:
                - .metadata.labels | to_entries | map(select(.key | startswith("flux") | not)) | from_entries

This example targets Ingress resources, attaching each host rule as a tool to catalog the domains and URLs used:

    service:
      import:
        - selector:
            apiVersion: networking.k8s.io/v1
            kind: Ingress
          opslevel:
            aliases:
              - '"k8s:\(.metadata.name)-\(.metadata.namespace)"'
            tools:
              - '.spec.rules | map({"category":"other","displayName":.host,"url": .host})'

Note the usage of aliases. These allow you to map data from multiple Kubernetes resources into a single service object in OpsLevel.
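As a rough illustration of the tools expression in the Ingress example, the Python sketch below mirrors what that jq expression computes for one resource. It only restates the jq logic and is not how kubectl-opslevel evaluates expressions; the function name ingress_tools and the sample Ingress are hypothetical.

```python
def ingress_tools(ingress):
    # Mirrors: .spec.rules | map({"category": "other", "displayName": .host, "url": .host})
    return [
        {"category": "other", "displayName": rule["host"], "url": rule["host"]}
        for rule in ingress["spec"]["rules"]
    ]


# Hypothetical Ingress with two host rules (only the fields the expression reads).
sample_ingress = {
    "metadata": {"name": "web", "namespace": "shop"},
    "spec": {"rules": [{"host": "shop.example.com"}, {"host": "api.example.com"}]},
}

tools = ingress_tools(sample_ingress)
for tool in tools:
    print(tool)
```

Each host rule becomes one tool entry, so an Ingress with two rules yields two tools on the service.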

Service Aliases

Aliases on services are the basis of how deduplication works when reconciling services.

Here’s one example of how to customize the generated aliases. This alias is also generated by default when no aliases are configured, so that services can still be looked up during reconciliation:

    service:
      import:
        - selector:
            apiVersion: apps/v1
            kind: Deployment
          opslevel:
            aliases:
              - '"k8s:\(.metadata.name)-\(.metadata.namespace)"'

The example concatenates together the resource name and namespace with a prefix of k8s: to create a unique alias.
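To see why a shared alias leads to deduplication, here is a small Python sketch of the same string construction. It is an illustration under stated assumptions, not the tool's implementation: the helper k8s_alias and the sample resources are hypothetical.

```python
from collections import defaultdict


def k8s_alias(resource):
    # Mirrors the jq string "k8s:\(.metadata.name)-\(.metadata.namespace)".
    meta = resource["metadata"]
    return f"k8s:{meta['name']}-{meta['namespace']}"


# Hypothetical resources: a Deployment and an Ingress for the same workload,
# plus a Deployment with the same name in a different namespace.
resources = [
    {"kind": "Deployment", "metadata": {"name": "web", "namespace": "shop"}},
    {"kind": "Ingress", "metadata": {"name": "web", "namespace": "shop"}},
    {"kind": "Deployment", "metadata": {"name": "web", "namespace": "billing"}},
]

# Group resource kinds by alias: resources sharing an alias would map to one service.
by_alias = defaultdict(list)
for resource in resources:
    by_alias[k8s_alias(resource)].append(resource["kind"])

for alias, kinds in by_alias.items():
    print(alias, kinds)
# k8s:web-shop ['Deployment', 'Ingress']
# k8s:web-billing ['Deployment']
```

The Deployment and Ingress in the same namespace produce the same alias, while the identically named Deployment in another namespace gets its own.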

Filtering Selected Kubernetes Resources

By default, all resources in every namespace that match the apiVersion and kind are included. To filter resources out, add jq expressions under excludes; any resource for which an expression returns a truthy value is skipped.

Here is an example filtering out resources in the kube-system namespace:

    service:
      import:
        - selector:
            apiVersion: apps/v1
            kind: Deployment
            excludes:
              - .metadata.namespace == "kube-system"
          opslevel:
            name: '"\(.metadata.name)-\(.metadata.namespace)"'

Here we filter out all resources in namespaces that end in dev:

    service:
      import:
        - selector:
            apiVersion: apps/v1
            kind: Deployment
            excludes:
              - .metadata.namespace | endswith("dev")
          opslevel:
            name: '"\(.metadata.name)-\(.metadata.namespace)"'

Here we invert the expression in excludes so that we only include things that match:

    service:
      import:
        - selector:
            apiVersion: apps/v1
            kind: Deployment
            excludes:
              - .metadata.namespace | endswith("dev") | not
          opslevel:
            name: '"\(.metadata.name)-\(.metadata.namespace)"'

NOTE: we still query your Kubernetes cluster for resources that match the given apiVersion and kind in all namespaces.

The excludes filter is an "if any are true" check, so you can stack multiple expressions to catch different aspects of the resources.
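The "any are true" behaviour can be sketched in Python as follows. This is a minimal model that assumes jq's truthiness rule (everything except false and null is truthy); the helpers jq_truthy, is_excluded and deployment, and the three stand-in checks, are hypothetical and only illustrate the semantics.

```python
def jq_truthy(value):
    # In jq, every value except false and null counts as truthy.
    return value is not None and value is not False


def is_excluded(resource, checks):
    # A resource is dropped as soon as any one check returns a truthy value.
    return any(jq_truthy(check(resource)) for check in checks)


# Python stand-ins for three illustrative jq exclude expressions.
checks = [
    lambda r: r["metadata"]["namespace"].endswith("dev"),
    lambda r: r["metadata"].get("labels", {}).get("environment") == "integration",
    lambda r: r["spec"]["template"]["metadata"].get("labels", {}).get("environment") == "staging",
]


def deployment(namespace, labels=None, pod_labels=None):
    # Build a minimal Deployment-shaped dict for the demo.
    return {
        "metadata": {"namespace": namespace, "labels": labels or {}},
        "spec": {"template": {"metadata": {"labels": pod_labels or {}}}},
    }


print(is_excluded(deployment("payments-dev"), checks))                               # True
print(is_excluded(deployment("payments", {"environment": "integration"}), checks))   # True
print(is_excluded(deployment("payments", {"environment": "production"}), checks))    # False
```

Only the last resource survives, because none of the three checks matches it.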

Here we filter out all resources that meet any one of these conditions:

    service:
      import:
        - selector:
            apiVersion: apps/v1
            kind: Deployment
            excludes:
              - .metadata.namespace | endswith("dev")
              - .metadata.labels.environment == "integration"
              - .spec.template.metadata.labels.environment == "staging"
          opslevel:
            name: '"\(.metadata.name)-\(.metadata.namespace)"'

Lifecycle, Tier, and Owner

The lifecycle, tier and owner fields in the configuration are validated only during service import, not during service preview. Valid values for these fields must match the alias of the corresponding object in OpsLevel. To view the valid aliases, run the account lifecycles, account tiers and account teams commands.

Tags

In the tags section there are 4 example expressions that show different ways to build the key/value payload for attaching tag entries to your service.

    tags:
      assign:
        - '{"imported": "kubectl-opslevel"}'
        - '.metadata.annotations | to_entries | map(select(.key | startswith("opslevel.com/tags"))) | map({(.key | split(".")[2]): .value})'
        - .metadata.labels
        - .spec.template.metadata.labels

The first example shows how to hardcode a tag entry. In this case, we are denoting that the imported service came from this tool.

The second example leverages a convention to capture 1..N tags. The jq expression looks for Kubernetes annotations in the format opslevel.com/tags.<key>: <value>. For example:

    annotations:
      opslevel.com/tags.hello: world

The third and fourth examples extract the labels applied to the Kubernetes resource directly into the OpsLevel service’s tags.
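The annotation convention used by the second expression can be mirrored in a few lines of Python. This is a sketch of what the jq expression computes, not the tool's own code; the function name tags_from_annotations and every annotation other than opslevel.com/tags.hello are hypothetical.

```python
def tags_from_annotations(annotations):
    # Mirrors: to_entries | map(select(.key | startswith("opslevel.com/tags")))
    #          | map({(.key | split(".")[2]): .value})
    return [
        {key.split(".")[2]: value}
        for key, value in annotations.items()
        if key.startswith("opslevel.com/tags")
    ]


annotations = {
    "opslevel.com/tags.hello": "world",
    "opslevel.com/tags.team": "payments",   # hypothetical second tag
    "example.com/unrelated": "ignored",     # does not match the prefix
}

print(tags_from_annotations(annotations))
# [{'hello': 'world'}, {'team': 'payments'}]
```

Because the key is taken from the third dot-separated segment, a tag key that itself contains a dot would be cut off at that dot.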

Our API has 2 types of tag operations with different semantics, assign and create:

- Assign: a tag with the same key name but a different value will be updated on the service.

- Create: a tag with the same key name but a different value will be added to the service.

Make sure you put your expressions under the desired directive.
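The difference between the two directives can be pictured with a toy model in Python. This is only a sketch of the semantics described above, treating a service's tags as a list of (key, value) pairs; the functions assign_tag and create_tag are hypothetical and do not represent the OpsLevel API.

```python
def assign_tag(tags, key, value):
    # Assign: any existing values for this key are replaced by the new value.
    kept = [(k, v) for (k, v) in tags if k != key]
    return kept + [(key, value)]


def create_tag(tags, key, value):
    # Create: the new pair is added alongside existing values for the same key.
    if (key, value) in tags:
        return list(tags)
    return list(tags) + [(key, value)]


existing = [("environment", "dev")]

print(assign_tag(existing, "environment", "prod"))
# [('environment', 'prod')]
print(create_tag(existing, "environment", "prod"))
# [('environment', 'dev'), ('environment', 'prod')]
```

In this model, assign suits tags that should hold a single current value, while create suits tags where several values per key are expected.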

Tools

The tools section provides 2 example expressions that show you how to build the necessary payload for attaching tools to your services in OpsLevel.

    tools:
      - '{"category": "other", "displayName": "my-cool-tool", "url": .metadata.annotations."example.com/my-cool-tool"}
        | if .url then . else empty end'
      - '.metadata.annotations | to_entries | map(select(.key | startswith("opslevel.com/tools")))
        | map({"category": .key | split(".")[2], "displayName": .key | split(".")[3],
        "url": .value})'

The first example shows you the 3 required fields (category, displayName and url), but the expression hardcodes the values for category and displayName, leaving you to specify where the url field comes from.

The second example leverages a convention to capture 1..N tools. The jq expression looks for Kubernetes annotations in the format opslevel.com/tools.<category>.<displayName>: <url>. For example:

    annotations:
      opslevel.com/tools.logs.datadog: https://app.datadoghq.com/logs

Repositories

In the repositories section there are 3 example expressions that show you how to build the necessary payload for attaching repository entries to your service.

    repositories:
      - '{"name": "My Cool Repo", "directory": "/", "repo": .metadata.annotations.repo} | if .repo then . else empty end'
      - .metadata.annotations.repo
      - '.metadata.annotations | to_entries | map(select(.key | startswith("opslevel.com/repos"))) | map({"name": .key | split(".")[2], "directory": .key | split(".")[3:] | join("/"), "repo": .value})'

The first example shows you the full payload that can be generated. It contains 3 fields (name, directory and repo), but the expression hardcodes the values for name and directory, leaving you to specify where the repo alias comes from.

The second example shows you that you can just return an alias and the tool will automatically craft the correct payload using a default name and default directory of /.

The third example follows our Tags and Tools convention to capture 1..N repositories. The jq expression looks for Kubernetes annotations in the format opslevel.com/repos.<displayName>.<repo.subpath.dots.turned.to.forwardslash>: <opslevel repo alias>. For example, the annotation:

    annotations:
      opslevel.com/repos.my_cool_repo.src: github.com:myorganization/my-cool-repo

would result in the following JSON:

    {"name": "my_cool_repo", "directory": "src", "repo": "github.com:myorganization/my-cool-repo"}

Full Sample Configuration

For additional details on how to generate a sample configuration yourself, see the repository.

    version: "1.1.0"
    service:
      import:
        - selector: # This limits what data we look at in Kubernetes
            apiVersion: apps/v1 # only supports resources found in 'kubectl api-resources --verbs="get,list"'
            kind: Deployment
            excludes: # filters out resources if any expression returns truthy
              - .metadata.namespace == "kube-system"
              - .metadata.annotations."opslevel.com/ignore"
          opslevel: # This is how you map your kubernetes data to opslevel service
            name: .metadata.name
            description: .metadata.annotations."opslevel.com/description"
            owner: .metadata.annotations."opslevel.com/owner"
            lifecycle: .metadata.annotations."opslevel.com/lifecycle"
            tier: .metadata.annotations."opslevel.com/tier"
            product: .metadata.annotations."opslevel.com/product"
            language: .metadata.annotations."opslevel.com/language"
            framework: .metadata.annotations."opslevel.com/framework"
            aliases: # These are how we identify the services again during reconciliation - please make sure they are unique
              - '"k8s:\(.metadata.name)-\(.metadata.namespace)"'
            tags:
              assign: # tag with the same key name but with a different value will be updated on the service
                - '{"imported": "kubectl-opslevel"}'
                # find annotations with format: opslevel.com/tags.<key name>: <value>
                - '.metadata.annotations | to_entries | map(select(.key | startswith("opslevel.com/tags"))) | map({(.key | split(".")[2]): .value})'
                - .metadata.labels
              create: # tag with the same key name but with a different value will be added to the service
                - '{"environment": .spec.template.metadata.labels.environment}'
            tools:
              - '{"category": "other", "displayName": "my-cool-tool", "url": .metadata.annotations."example.com/my-cool-tool"} | if .url then . else empty end'
              # find annotations with format: opslevel.com/tools.<category>.<displayname>: <url>
              - '.metadata.annotations | to_entries | map(select(.key | startswith("opslevel.com/tools"))) | map({"category": .key | split(".")[2], "displayName": .key | split(".")[3], "url": .value})'
            repositories: # attach repositories to the service using the opslevel repo alias - e.g. github.com:hashicorp/vault
              - '{"name": "My Cool Repo", "directory": "/", "repo": .metadata.annotations.repo} | if .repo then . else empty end'
              # if just the alias is returned as a single string we'll build the name for you and set the directory to "/"
              - .metadata.annotations.repo
              # find annotations with format: opslevel.com/repos.<displayname>.<repo.subpath.dots.turned.to.forwardslash>: <opslevel repo alias>
              - '.metadata.annotations | to_entries | map(select(.key | startswith("opslevel.com/repos"))) | map({"name": .key | split(".")[2], "directory": .key | split(".")[3:] | join("/"), "repo": .value})'

Try it Out. Give us feedback.

If you have any feedback on how we can improve this Kubernetes integration, feel free to contact us at [email protected]. If you aren’t already using OpsLevel and want to register, please request a demo.
